In my work measuring digital advertising campaigns, I often see how quickly the landscape shifts—driven by advances in transformers and deep learning. These changes make it important to pause and assess which innovations are most relevant to improving outcomes for advertisers and platforms. The Deep Interest Network (DIN) paper caught my attention as a practical and meaningful contribution. Using it as a starting point, I explored the key developments that led here, considered where DIN fits into the broader CTR prediction landscape, and reflected on possible directions for future progress.

Click-through rate (CTR) prediction is the lifeblood of modern digital advertising and recommendation systems. Whether it’s deciding which product to show on Amazon, which video to recommend on YouTube, or which ad wins in a real-time bidding (RTB) auction, CTR estimates directly affect both user experience and revenue.

While CTR prediction has been studied for years, the Deep Interest Network (DIN)—introduced by Alibaba Group researchers—brings a fresh perspective to a long-standing challenge: how to model diverse and context-dependent user interests effectively.

Why CTR Prediction Is Hard

CTR prediction systems face three major challenges:

- Extreme Sparsity in Data

Most user and item attributes are categorical and extremely sparse. In datasets like Criteo’s, the feature space can have tens of millions of dimensions with >99.99% sparsity. Simple models like logistic regression struggle to generalize in such conditions.

- Complex Feature Interactions

User behavior patterns often depend on high-order interactions, e.g., {Gender=Male, Age=10, ProductCategory=VideoGame}, which can’t be captured by looking at features in isolation. Manually engineering these interactions is labor-intensive and incomplete.

- Context Dependence of User Interests

A single user’s browsing history may span multiple categories (e.g., handbags, jackets, electronics). Not all interests are equally relevant to every ad impression.
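To make the sparsity figure concrete, here is a small arithmetic sketch. The field names and cardinalities are hypothetical, chosen only for illustration; the point is that one-hot encoding leaves exactly one non-zero entry per categorical field, no matter how large the vocabulary is.

```python
# Hypothetical field cardinalities, chosen only for illustration.
field_sizes = {
    "user_id": 10_000_000,
    "item_id": 5_000_000,
    "category": 10_000,
    "city": 500,
}

def one_hot_sparsity(field_sizes):
    """Fraction of zero entries when each field contributes exactly one active index."""
    total_dims = sum(field_sizes.values())
    active = len(field_sizes)  # one non-zero per categorical field
    return 1.0 - active / total_dims

sparsity = one_hot_sparsity(field_sizes)
print(f"{sum(field_sizes.values()):,} dimensions, sparsity = {sparsity:.7%}")
```

Multi-valued fields such as a browsing history activate more than one index, but the active count stays tiny relative to the total dimension.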

Modeling Approaches

From Embeddings to Interest Modeling

The first step in modern CTR models is to convert high-dimensional categorical features into dense, low-dimensional embeddings—similar to how NLP models embed words. This reduces dimensionality and captures latent structure (e.g., product category similarity).

In standard models, these embeddings are pooled (often averaged) into a single user vector and fed into a prediction network. While effective, this approach compresses all user interests into one fixed-length vector, losing granularity.

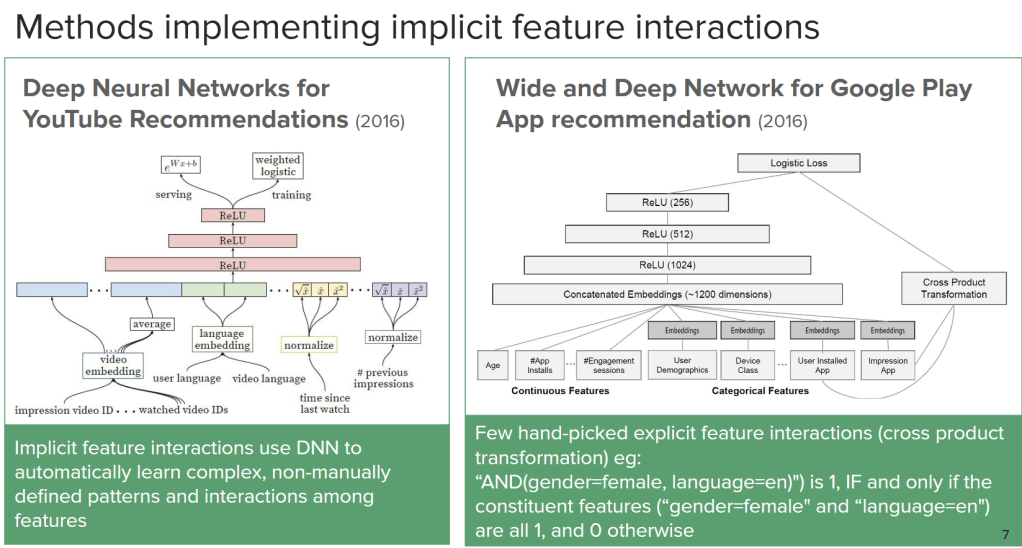
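A minimal sketch of the embed-then-pool step, assuming NumPy and a randomly initialised table standing in for learned embeddings (the sizes and item IDs are hypothetical). It shows that histories of different lengths collapse to the same fixed-length vector.

```python
import numpy as np

rng = np.random.default_rng(0)
NUM_ITEMS, EMBED_DIM = 1000, 8  # hypothetical vocabulary size and embedding width
item_embeddings = rng.normal(size=(NUM_ITEMS, EMBED_DIM))  # stands in for a learned table

def pooled_user_vector(behavior_ids):
    """Average the embeddings of a variable-length behavior list into one vector."""
    vectors = item_embeddings[np.asarray(behavior_ids)]  # (num_behaviors, EMBED_DIM)
    return vectors.mean(axis=0)                          # (EMBED_DIM,)

short_history = pooled_user_vector([3, 17])
long_history = pooled_user_vector([3, 17, 256, 512, 999])
print(short_history.shape, long_history.shape)
```

Because the average does not depend on which ad is being scored, the same user vector is reused for every candidate, which is the loss of granularity described above.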

Implicit vs. Explicit Feature Interaction Approaches

Industry research has pursued two main paths:

- Implicit Interaction Models – Let deep neural networks discover feature interactions automatically.

Examples:

- Wide & Deep (Google Play)

- YouTube’s recommendation DNN

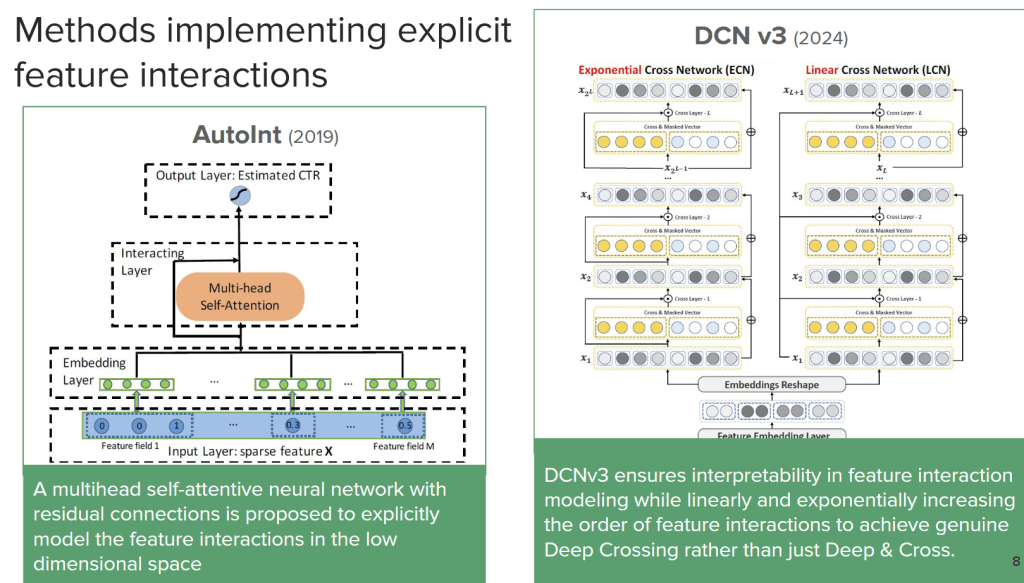

- Explicit Interaction Models – Model and expose feature interactions explicitly for interpretability.

Examples:

- AutoInt (multi-head self-attention for feature interaction)

- DCNv3 (deep cross networks with interpretable crossing layers)

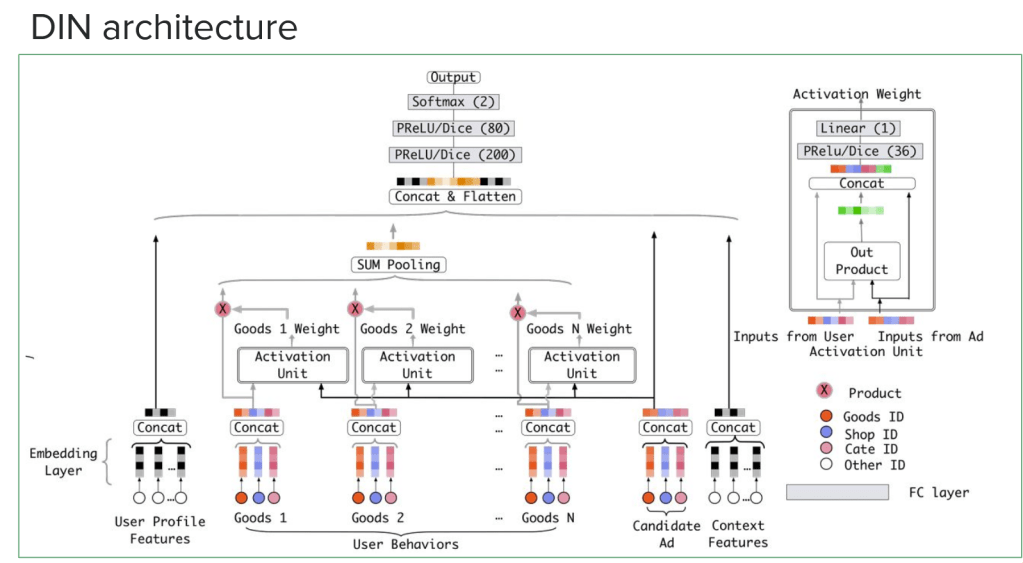
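As a rough illustration of the explicit approach, the sketch below applies single-head self-attention across feature fields, in the spirit of AutoInt. It is a simplification under stated assumptions: random matrices stand in for learned projections, and the residual connection and multiple heads are omitted. The returned attention matrix is what makes the interactions inspectable.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def field_self_attention(field_emb, w_q, w_k, w_v):
    """Single-head self-attention across feature fields.

    field_emb: (num_fields, d), one embedding per field (e.g. gender, age, category).
    Returns the interacted field representations and the (num_fields, num_fields)
    attention matrix, which shows how strongly each field attends to the others.
    """
    q, k, v = field_emb @ w_q, field_emb @ w_k, field_emb @ w_v
    scores = q @ k.T                 # pairwise field-to-field scores
    attn = softmax(scores, axis=-1)  # each row sums to 1
    return attn @ v, attn

rng = np.random.default_rng(0)
num_fields, d, d_head = 3, 8, 4      # hypothetical sizes
field_emb = rng.normal(size=(num_fields, d))
w_q, w_k, w_v = (rng.normal(size=(d, d_head)) for _ in range(3))

interacted, attn = field_self_attention(field_emb, w_q, w_k, w_v)
print(attn.round(2))
```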

Deep Interest Network

Limitations of Fully Connected Implicit Interaction Models

Fully connected implicit interaction models accept only fixed-length inputs, so a user’s diverse interests must be compressed into a single fixed-length vector of manageable size, which limits the model’s expressive power. That compression is also unnecessary: when scoring a candidate ad, only the part of the user’s interests related to that ad influences whether they click.

DIN’s Key Innovation: Local Activation Unit

As an implicit interaction model, DIN builds on the approaches discussed above but takes them a step further by directly addressing the context-dependence problem. It does this through a local activation unit, which adaptively weighs a user’s historical behaviors according to their relevance to the current ad.

- For a t-shirt ad, clothing-related behaviors get more weight.

- For a smartphone ad, electronics-related behaviors dominate.

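Unlike the softmax attention used in transformers, DIN’s activation weights are not normalized to sum to 1. Their overall magnitude can therefore reflect how strongly the user’s history matches the ad, allowing stronger emphasis on dominant interests.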

This produces a context-specific user interest vector, enabling the model to focus on the subset of historical interactions most likely to influence the click decision.
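The local activation idea can be sketched as follows, assuming NumPy. This is an illustrative simplification rather than the paper's exact unit: the weights are random stand-ins for learned parameters, the behavior-ad interaction feature is an element-wise product, and the hidden layer uses ReLU. All sizes and variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
EMBED_DIM, HIDDEN = 8, 16  # hypothetical sizes

# Randomly initialised weights stand in for learned parameters.
W1 = rng.normal(scale=0.1, size=(3 * EMBED_DIM, HIDDEN))
b1 = np.zeros(HIDDEN)
W2 = rng.normal(scale=0.1, size=(HIDDEN, 1))
b2 = np.zeros(1)

def activation_weights(behaviors, ad):
    """Score each behavior embedding against the candidate ad with a small MLP."""
    ad_tiled = np.broadcast_to(ad, behaviors.shape)
    # Simplification: element-wise product as the behavior-ad interaction feature.
    features = np.concatenate([behaviors, behaviors * ad_tiled, ad_tiled], axis=1)
    hidden = np.maximum(features @ W1 + b1, 0.0)  # ReLU, chosen here for brevity
    return (hidden @ W2 + b2).ravel()             # one unnormalised weight per behavior

def user_interest_vector(behaviors, ad):
    """Weighted sum pooling: no softmax, so the weights need not sum to 1."""
    w = activation_weights(behaviors, ad)
    return (w[:, None] * behaviors).sum(axis=0), w

behaviors = rng.normal(size=(5, EMBED_DIM))  # five historical behaviors
tshirt_ad = rng.normal(size=EMBED_DIM)
phone_ad = rng.normal(size=EMBED_DIM)

v_tshirt, w_tshirt = user_interest_vector(behaviors, tshirt_ad)
v_phone, w_phone = user_interest_vector(behaviors, phone_ad)
print(w_tshirt.round(3), w_phone.round(3))
```

The same five behaviors yield different weights and a different interest vector for each candidate ad, in contrast to plain average pooling, which returns one vector regardless of the ad.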

Training Techniques That Make It Work at Scale

Alibaba’s production environment involves 60M users and 0.6B products, so efficiency matters.

- Mini-batch Aware Regularization – Applies L2 regularization only to features present in the current mini-batch, avoiding unnecessary computation on inactive features.

- Dice Activation – A data-adaptive activation function that shifts its rectification threshold based on the input distribution, improving stability across layers.
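Both techniques can be sketched in a few lines of NumPy. The function names, the fixed `alpha`, and the toy occurrence counts are assumptions for illustration. Dice is written in its training-time form using mini-batch statistics, and the regularizer returns only the penalty gradient, scaled per feature by its occurrence count as I understand the paper's formulation.

```python
import numpy as np

def dice(s, alpha=0.25, eps=1e-8):
    """Dice activation over a mini-batch.

    s: (batch, units) pre-activations. p is a sigmoid of the batch-standardised
    input, so the soft switch between the two linear pieces sits at the batch
    mean rather than at zero. alpha is learned in practice; fixed here.
    """
    mean = s.mean(axis=0, keepdims=True)
    var = s.var(axis=0, keepdims=True)
    p = 1.0 / (1.0 + np.exp(-(s - mean) / np.sqrt(var + eps)))
    return p * s + (1.0 - p) * alpha * s

def mini_batch_l2_grad(emb_table, batch_feature_ids, feature_counts, lam=0.01):
    """Gradient of the L2 penalty restricted to embedding rows seen in this batch.

    Each active row is divided by that feature's occurrence count in the full
    training set, so frequent features are penalised less per update.
    """
    grad = np.zeros_like(emb_table)
    active = np.unique(batch_feature_ids)
    grad[active] = lam * emb_table[active] / feature_counts[active, None]
    return grad

rng = np.random.default_rng(0)
out = dice(rng.normal(loc=2.0, size=(32, 4)))

emb_table = rng.normal(size=(10, 3))
feature_counts = np.arange(1, 11)  # hypothetical occurrence counts
grad = mini_batch_l2_grad(emb_table, np.array([1, 4, 4, 7]), feature_counts)
print(np.flatnonzero(np.abs(grad).sum(axis=1)))  # rows that received a penalty
```

Only the rows for features in the batch are touched, which is what keeps the cost proportional to the batch rather than to the full embedding table.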

Results: Tangible Gains at Alibaba

Results reported by the DIN authors:

- Roughly +11% relative improvement in weighted AUC over the base DNN in offline experiments on Alibaba’s dataset.

- +10% CTR and +3.8% revenue per mille (RPM) in an online A/B test at Alibaba that ran for almost a month in 2017.

These gains led to DIN’s deployment across Alibaba’s large-scale display advertising system.
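For readers unfamiliar with the metric, the sketch below shows one way to compute a user-weighted AUC and a relative improvement over a base model. As I read the DIN paper, the weighted AUC averages per-user AUC using impression counts as weights, and relative gains are measured against the 0.5 random baseline. The toy data is hypothetical, and skipping users with only one label class is my assumption.

```python
from collections import defaultdict

def auc(labels, scores):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        return None  # undefined when only one class is present
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def weighted_auc(user_ids, labels, scores):
    """Average per-user AUC, weighted by each user's number of impressions."""
    by_user = defaultdict(lambda: ([], []))
    for u, y, s in zip(user_ids, labels, scores):
        by_user[u][0].append(y)
        by_user[u][1].append(s)
    num = den = 0.0
    for ys, ss in by_user.values():
        a = auc(ys, ss)
        if a is not None:  # assumption: skip single-class users
            num += len(ys) * a
            den += len(ys)
    return num / den

def rela_impr(auc_model, auc_base):
    """Relative improvement (%) over a base model, against the 0.5 random baseline."""
    return ((auc_model - 0.5) / (auc_base - 0.5) - 1.0) * 100.0

user_ids = ["a", "a", "a", "b", "b", "b", "b"]
labels = [1, 0, 0, 1, 0, 1, 0]
scores = [0.9, 0.2, 0.4, 0.3, 0.6, 0.8, 0.1]
print(weighted_auc(user_ids, labels, scores))
```

Measuring against 0.5 is why a small absolute AUC change can appear as a double-digit relative improvement.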

Visualization Insights

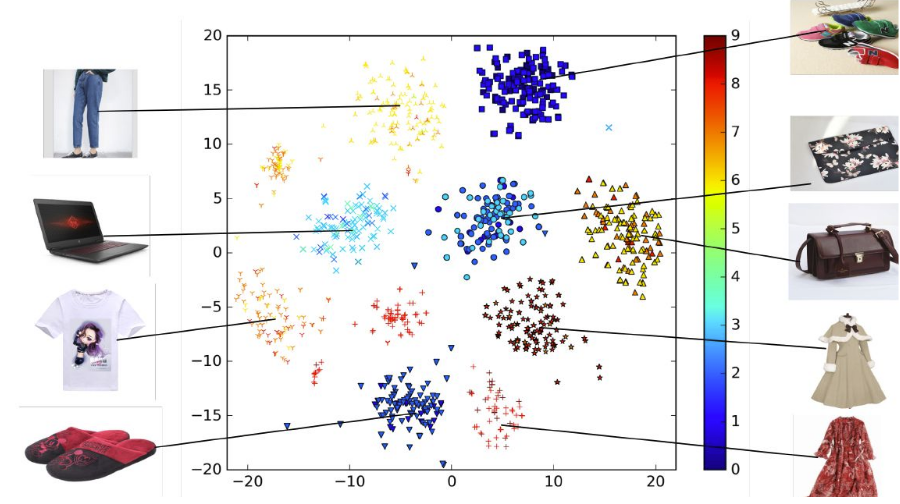

A t-SNE visualization of the learned embeddings (points with the same shape correspond to ads in the same category; color indicates the CTR for each ad) showed:

- Items from the same category naturally clustered together—despite no explicit category supervision.

- Click probabilities varied smoothly within these clusters, showing the model’s nuanced interest mapping.

Why DIN Matters

DIN’s contribution goes beyond Alibaba’s needs. It illustrates a broader principle for recommender and ranking systems:

User interests should be modeled in a context-dependent, dynamic way rather than compressed into a single static profile.

From my perspective in digital ad measurement, DIN represents a solid step toward more context-aware personalization. It bridges current best practices with the potential of emerging approaches, hinting at a future where CTR models combine adaptiveness with greater interpretability. As transformer-based architectures and advanced attention mechanisms continue to evolve, I expect DIN’s core ideas to remain central to shaping the next generation of advertising intelligence.

References

- Zhou et al., Deep Interest Network for Click-Through Rate Prediction, KDD 2018.

- Song et al., AutoInt: Automatic Feature Interaction Learning via Self-Attentive Neural Networks, 2019.

- Li et al., DCNv3: Towards Next Generation Deep Cross Network for Click-Through Rate Prediction, 2024.

- Cheng et al., Wide & Deep Learning for Recommender Systems, 2016.

- Covington et al., Deep Neural Networks for YouTube Recommendations, 2016.
